Secure, Privacy-preserving Artificial Intelligence

The amount of (personal) data collected as well as the number of digital interactions are growing at a staggering rate. At the same time, data breaches and data privacy violations are becoming somewhat commonplace.

As such, it is most critical to place a greater emphasis on data safety and data privacy during data acquisition as well as the various stages of data handling, in particular during computation (e.g., data analysis and machine learning).

While there are a few domains (e.g., healthcare and finance) that have had strong legal data privacy guidelines for many years, the data privacy landscape at large is now undergoing significant changes:

- Regulation is spreading in full force; from the EU’s GDPR to China’s updated Information security technology – personal information security specification to California’s upcoming Consumer Privacy Act and much more.

- Domain specific guidelines are being developed for responsible usage of AI; e.g., MAS’ Principles to Promote Fairness, Ethics, Accountability and Transparency (FEAT) in the Use of AI and Data Analytics in Singapore’s financial sector.

- Executives of the top corporations are speaking up for better data privacy regimes; e.g., refer to Sundar Pichai’s NY Times article on ‘Privacy Should Not Be a Luxury Good’ or Apple’s and Microsoft’s declarations that privacy (in the digital realm) should be a fundamental human right.

However, those are (by far) not the only drivers. There are also broader benefits that come with adopting approaches and technologies to utilise the collective wisdom of peers and competitors, without giving up sensitive data or trade secrets; without violating laws or regulatory requirements; and, without enabling the competition (e.g., having access to a diverse enough dataset to help doctors diagnose difficult problems or choose better treatments).

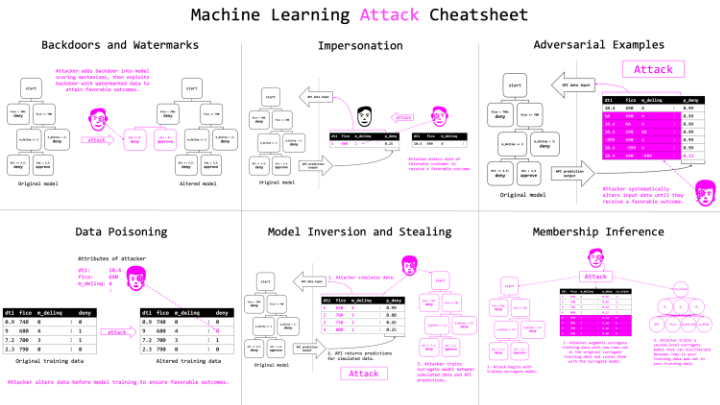

Still, prevailing machine (espc. deep) learning approaches are data hungry (→ increased attack surface), expose hidden utility of data (→ unexpected insights and predictions, e.g., due to mosaic effect), and generate models that potentially expose memorized information from (underlying training) data sets (→ statistical / membership inference attacks, adversarial attacks or model extraction attacks).

Privacy-preserving approaches are nothing new; on the contrary, they have been researched and developed for decades in areas such as information security, communication security, secure / hippocratic databases, and trusted computing. Prevailing techniques include trusted execution environments, traditional encryption, homomorphic encryption, (secure) multi-party computation, federated learning, differential privacy, and distributed ledger technologies.

Based on one or more of those techniques, several privacy-preserving libraries / frameworks for machine learning have emerged over the past 18 months; notable efforts include:

- Gazelle (homomorphic encryption and two-party computation techniques such as garbled circuits);

- TF Encrypted (homomorphic encryption and multi-party computation), TF Privacy (differential privacy) and TF Federated (federated learning); and

- OpenMined PySyft and Grid (federated learning, differential privacy, homomorphic encryption and multi-party computation).

(For a more comprehensive summary, please refer to Jason Mancuso, Ben DeCoste and Gavin Uhma: “Privacy-Preserving Machine Learning 2018: A Year in Review”.)

The team at Wismut Labs has been following the OpenMined efforts closely since early September 2018, and we have decided to dedicate some of our internal resources to help grow a corresponding Singapore community that is focused on researching, developing, and elevating tools for secure, privacy-preserving, value-aligned artificial intelligence.

As such, we are sponsoring and co-organising the OpenMined Singapore Meetup Group and their events; with the inaugural event having attracted over 25 participants. Slides used during the event can be found below.